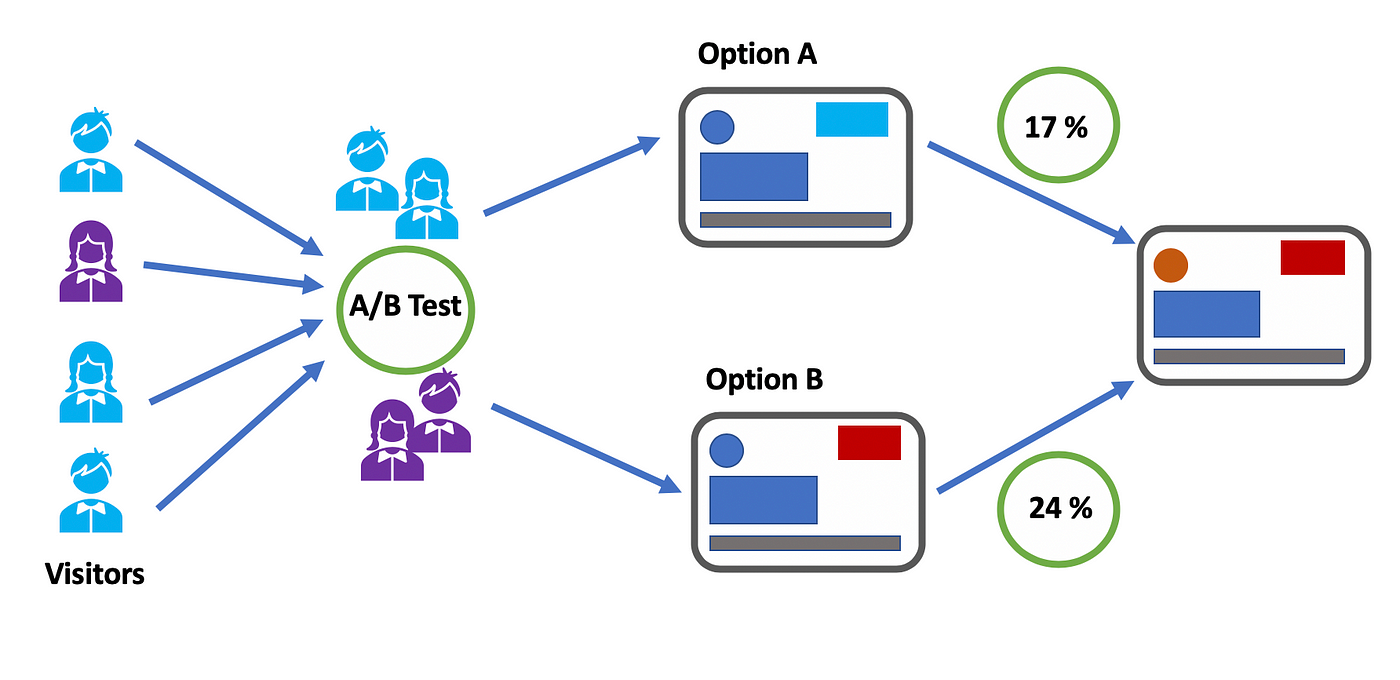

A/B testing, often called split testing, is an invaluable strategy in the digital marketing toolkit that allows marketers to make data-driven decisions. By comparing two versions of a webpage, email, or ad, marketers can see which performs better and improve their strategies accordingly. This method applies not only to layout designs but also to content, images, call-to-action buttons, and more, making it a versatile tool for optimization across various digital platforms.

In the chaotic world of digital marketing, where trends come and go in the blink of an eye, one thing remains constant — the quest for optimizing user experiences and driving conversions. One of the most powerful tools in the marketer’s arsenal is A/B testing. A/B testing, or split testing, is the scientific method of comparing two versions of an element to see which one performs better. By making incremental changes and measuring the impact, businesses can make data-driven decisions that can significantly improve their key performance indicators.

Why A/B Testing Matters in the Digital Space

In today’s digital landscape, where every click, view, and engagement can be quantified, A/B testing is a beacon of empirical decision-making. It matters because it transcends guesswork and intuition, offering concrete data on what truly resonates with audiences. This approach enhances user satisfaction and directly impacts a company’s bottom line. A/B testing allows for the meticulous refinement of user experiences, ensuring that every element of digital content is tailored to meet the preferences of its target audience. From optimizing landing pages for higher conversion rates to tweaking email campaigns for better open and click-through rates, A/B testing provides the insights needed to elevate a brand’s digital presence. By systematically identifying and implementing the most effective strategies, businesses can improve online visibility, engage more deeply with customers, and drive more efficient and successful marketing outcomes.

A/B testing is an invaluable asset for digital marketers. Its results can illuminate the best path forward, dramatically affecting revenue, user engagement, and market share. In its simplest form, A/B testing allows you to see the real-world impacts of any change to your user experience — from the color of a button to the entire page layout.

Unlike other modes of analysis, A/B testing gives clear, immediate feedback without speculation. When appropriately deployed, A/B testing can negate the guesswork involved in user behavior predictions. By directly measuring the change on a portion of your audience, you can confidently adjust your strategy based on the data.

Understanding A/B Testing

At its core, A/B testing involves a straightforward process but requires a thoughtful approach to yield valuable insights. The process starts with identifying a goal, which can range from increasing website traffic to boosting email newsletter subscriptions. Once the objective is clear, the next step involves creating two variants (A and B) of the digital element. These variants should differ in a single aspect, such as headline text, button color, or image placement, so that marketers can isolate the effect of that specific change on user behavior.

Following the creation of these variants, the audience is randomly divided so that each visitor experiences only one version. Random assignment makes the two groups statistically similar on average, enabling an accurate comparison of the performance between variant A and variant B. Performance metrics, chosen according to the initial goal, are then meticulously tracked and analyzed. These could include click-through rates, conversion rates, or any other relevant indicator of success.

The beauty of A/B testing lies in its simplicity and its power. By directly comparing two versions of a piece of content, marketers can eliminate uncertainty and make informed decisions that enhance the user experience and contribute positively to the business goals. Importantly, A/B testing is an iterative process. Insights gained from one test can lead to further questions and subsequent tests, creating a continuous improvement and optimization cycle.

At its core, A/B testing is a process of making comparisons. You take an existing version (A) and create a new version (B) with a single variation. You then direct equal traffic to A and B and determine which version performs better.

Benefits of A/B Testing

One of the primary benefits of A/B testing is increased user engagement. By tailoring content, designs, and features to meet the preferences of your target audience, you naturally enhance the user experience, leading to higher levels of engagement. This can result in more time spent on your website, higher click-through rates on your emails, and improved interaction with your brand across digital platforms.

Another significant advantage is higher conversion rates. Through meticulous testing and optimization, businesses can identify the elements that most effectively encourage users to take desired actions, whether purchasing, signing up for a newsletter, or filling out a contact form. This directly impacts the company’s bottom line, turning casual browsers into valuable customers.

Furthermore, A/B testing can reduce bounce rates and exit rates. By conducting tests on different segments of your website or digital marketing channels, you can understand what keeps users interested and what causes them to leave. This information is crucial for creating a seamless user experience that captures and retains attention.

The primary benefits of A/B testing are its objectivity and precision. It allows you to measure precisely how much an alteration to your marketing or product has influenced user behavior. Here are some of the most significant benefits:

- You can prove (or disprove) the value of changes with empirical evidence.

- It can help improve the user experience, leading to better customer satisfaction and potentially increased lifetime value.

- A/B testing can result in significant revenue increases, as even small changes that improve conversion rates can translate to more sales or leads.
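To make the revenue point concrete, here is a minimal arithmetic sketch. The function name and every input figure (visitor count, conversion rates, order value) are hypothetical and chosen only for illustration; they are not results from any real test.

```python
def added_monthly_revenue(visitors: int, baseline_rate: float,
                          new_rate: float, avg_order_value: float) -> float:
    """Extra revenue per month if the conversion rate moves from baseline to new."""
    extra_orders = visitors * (new_rate - baseline_rate)
    return extra_orders * avg_order_value


# Hypothetical inputs: 50,000 monthly visitors, conversion rising from
# 2.0% to 2.3%, and an average order value of 40 (in any currency).
print(added_monthly_revenue(50_000, 0.020, 0.023, 40.0))
```

With these made-up inputs, a 0.3 percentage point improvement works out to roughly 150 extra orders, or about 6,000 in added monthly revenue.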

The A/B Testing Method

The A/B Testing Method begins with formulating a hypothesis based on observations, analytics, or customer feedback. This hypothesis aims to improve a specific metric, whether it be enhancing user engagement, increasing conversion rates, or any other key performance indicator relevant to the business’s goals. Once the hypothesis is established, the actual testing phase can begin.

Two versions of a web page, email, or other digital asset are created: Version A (the control) and Version B (the variant), which includes the change hypothesized to improve performance. Traffic is split evenly and at random between these two versions so that each receives an audience similar in demographics and behavior.

The performance of each version is meticulously monitored to collect data on various metrics, such as conversion rates, time spent on a page, and bounce rates. Analytical tools are often used to measure and compare the control and variant metrics accurately. They also help determine whether the observed difference is statistically significant, which provides confidence in the data collected.

The outcome of A/B testing can lead to a clear decision on whether the change should be implemented across the board. If Version B outperforms Version A, the new change is adopted. However, if no improvement is noted or Version A remains superior, the hypothesis is rejected or revised for future testing.

The A/B testing method follows a clear set of steps:

- Formulate a hypothesis: Identify an area of improvement and formulate a hypothesis about what change could lead to a better outcome.

- Design the experiment: Create a variation of the element you want to test and ensure it’s directly comparable.

- Randomize and split traffic: Direct an equal, random segment of your audience to each version.

- Gather data and analyze the results: Observe user behavior and analyze the data to see which version performed better.

- Draw conclusions and iterate: Use your findings to decide whether to adopt the tested change, then start the cycle again.

Designing A/B Testing

Designing practical A/B tests requires a keen understanding of your objectives, a clear definition of success, and meticulous planning. The initial step involves ensuring that the test focuses solely on a single variable to accurately isolate its impact. This could involve changing the color of a call-to-action button, altering the headline of a landing page, or testing different email subject lines. It’s crucial to make only one change at a time, as this allows you to attribute any differences in performance directly to that specific modification.

Next, determine the sample size and duration of the test. These factors depend on your website’s traffic or email campaign size and the expected impact of the change. Statistical power calculations can help you decide how many visitors each variant needs, and therefore how long to run the test, so that the test is neither too small to detect the effect nor longer than necessary.
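As a rough illustration of such a power calculation, the sketch below uses the standard normal-approximation formula for comparing two proportions. The function name, the default significance level (0.05), and the default power (0.80) are assumptions made for this example; dedicated calculators in testing tools may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    pooled = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * pooled * (1 - pooled))
        + z_power * math.sqrt(baseline_rate * (1 - baseline_rate)
                              + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical scenario: 10% baseline conversion, hoping to detect a rise to 12%.
print(sample_size_per_variant(0.10, 0.12))
```

Note how quickly the requirement grows as the expected effect shrinks: halving the difference you want to detect roughly quadruples the sample you need.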

Before launching the test, establish your metrics for success, whether it’s conversion rate, click-through rate, or another relevant metric. This clarity will guide you in evaluating the outcome objectively.

Finally, the test can be implemented using A/B testing software or tools that ensure accurate traffic splitting and data collection. Monitoring the test’s progress and being prepared to adjust for any unforeseen circumstances is integral to maintaining the test’s integrity.

Upon completion, analyze the data collected to ascertain which version achieved the predetermined success metrics. This analysis will assess if the hypothesis holds up and help decide if the change should be embraced long-term, adjusted, or let go. Through rigorous A/B testing, digital marketers can continually refine and enhance their strategies, driving superior results and achieving sustained business success.

These are the most critical guiding principles for designing your A/B tests to ensure the validity and reliability of your results.

Know Your Goals and Formulate Hypotheses

Understanding your goals and formulating precise hypotheses are pivotal steps in A/B testing. Start by identifying clear, measurable objectives. What do you wish to achieve with this test? Is it to increase the sign-up rate, boost email open rates, or perhaps enhance the average order value? Having a definitive goal in mind focuses the test and aligns it with your broader business strategies.

After defining your goal, brainstorm hypotheses that could enhance your key metric. A hypothesis should be specific, testable, and based on insights from your data analytics, user feedback, or industry best practices. It’s not just about guessing; it’s about making educated assumptions. For example, suppose you aim to increase newsletter sign-ups. Your hypothesis might be that adding a testimonials section to the sign-up page will build trust and improve conversion rates.

This methodical approach ensures that every A/B test you conduct is purposeful and grounded in your business objectives, maximizing the chances of uncovering valuable insights that can drive significant improvements in your digital marketing outcomes.

You must have a clearly defined goal for what you want to achieve through the A/B test, and your hypothesis should directly relate to that goal. Without this clear direction, you run the risk of misinterpreting the data.
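One way to keep the goal and hypothesis tied together is to write them down as a small structured record before the test starts. The sketch below is only an illustration: the `ExperimentPlan` class, its field names, and the numeric values are hypothetical, and the wording reuses the newsletter example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentPlan:
    goal: str
    hypothesis: str
    primary_metric: str
    baseline_rate: float            # current conversion rate
    minimum_detectable_lift: float  # relative lift, e.g. 0.10 means +10%

    def target_rate(self) -> float:
        """Conversion rate the variant must reach to meet the planned lift."""
        return self.baseline_rate * (1 + self.minimum_detectable_lift)


plan = ExperimentPlan(
    goal="Increase newsletter sign-ups",
    hypothesis="A testimonials section on the sign-up page builds trust "
               "and raises the sign-up rate",
    primary_metric="signup_conversion_rate",
    baseline_rate=0.04,             # hypothetical value
    minimum_detectable_lift=0.10,   # hypothetical value
)
print(plan.primary_metric, plan.target_rate())
```

Freezing the record (`frozen=True`) is a deliberate choice here: it discourages changing the metric or the target after results start coming in.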

Selecting Variables to Test

When it comes to A/B testing, selecting variables is a critical step that can significantly influence the outcomes of your experiments. The variables range from design elements like button colors and font sizes to content-specific features such as call-to-action (CTA) text or image placements. To decide on a variable to test, consider areas where user engagement metrics suggest room for improvement or leverage user feedback and heatmap analysis to identify potential friction points.

Upon identification, it is crucial to precisely outline the presentation of each variation of the variable. For instance, if you’re testing the color of a CTA button, Variation A might use a blue button, while Variation B uses a green button. The key here is consistency across other elements, ensuring that any observed outcome differences can confidently be attributed to the tested variable.

Remember, selecting variables for A/B testing isn’t just about seeing which variation performs better in the short term. It’s also about gaining deeper insights into what resonates with your audience, enabling more informed decisions that contribute to a more effective and engaging user experience in the long run.

You need to select the right element to test. Ensuring that the component under comparison significantly impacts the user behavior you aim to optimize is vital.

Implementing A/B Testing

Implementing A/B tests efficiently requires a seamless integration of technology, clear communication among team members, and a structured approach to deployment and analysis. Begin by setting up the variations within your A/B testing tool. Each variant should be identical except for the element you’re testing. This clarity guarantees that any variations in performance can be precisely linked to that specific element.

Next, segment your audience to ensure that each group receives only one version of the variable. This segmentation can be random or based on specific criteria, depending on the test’s objectives. It’s also essential to decide on the proportion of your audience exposed to each variant. A 50/50 split is standard, but adjustments may be necessary based on the size of your audience and the expected effect size.
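If you are not relying on a testing tool to do the split, one common approach is to hash a stable user identifier so that each person always sees the same variant. The sketch below assumes users have such an identifier; the function name, the `share_b` parameter, and the experiment name are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, share_b: float = 0.5) -> str:
    """Deterministically place a user in "A" or "B"; share_b is B's traffic share."""
    if not 0.0 < share_b < 1.0:
        raise ValueError("share_b must be between 0 and 1 (exclusive)")
    # Include the experiment name so different experiments split independently.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into a position in [0, 1].
    position = int.from_bytes(digest[:8], "big") / 2**64
    return "B" if position < share_b else "A"


# Hypothetical usage: a standard 50/50 split, then a cautious 80/20 split.
print(assign_variant("user-123", "cta-color"))
print(assign_variant("user-123", "cta-color", share_b=0.2))
```

Because the assignment depends only on the identifier and the experiment name, a returning visitor lands in the same group without any stored state.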

After launching the test, monitor performance closely to ensure data integrity. Be vigilant for technical glitches that may distort results, and be ready to tweak as necessary. Throughout this process, maintain open lines of communication with all stakeholders to share progress and insights.
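One integrity check that can be automated is comparing the observed traffic split with the intended one, since a large imbalance often points to a tracking or assignment glitch. The sketch below uses a chi-square goodness-of-fit test with one degree of freedom; the function name and the example counts are hypothetical, and it does not handle an empty sample.

```python
import math


def sample_ratio_mismatch_p_value(visitors_a: int, visitors_b: int,
                                  expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for the observed split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # With 1 degree of freedom, the chi-square tail probability is erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical counts for a test that was meant to split traffic 50/50.
print(sample_ratio_mismatch_p_value(5000, 5050))  # small imbalance
print(sample_ratio_mismatch_p_value(5000, 5400))  # larger imbalance
```

A very small p-value here says nothing about which variant is better; it only suggests the split itself deserves investigation before you trust the results.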

Finally, after reaching the predetermined end date or sample size, halt the test and proceed with data analysis. This phase is critical in understanding the impact of the variable tested and requires a meticulous approach to ensure the accuracy and relevance of the findings. Remember, successful implementation of A/B tests lies in the precision of setup, diligence during the testing phase, and thorough analysis of results.

Now that you know what to test and why, it’s time to execute. This section will guide you through the practical considerations of running your A/B tests.

Tools and Platforms for Testing

Choosing the right tools and platforms for your A/B testing is crucial for ensuring that your tests are practical, manageable, and yield actionable insights. A myriad of A/B testing tools are available, ranging from comprehensive digital marketing platforms to more specialized software focused solely on testing. When selecting a tool, consider factors such as the complexity of your tests, integration capabilities with your existing tech stack, the scale of your audience, and, importantly, the level of analytics and reporting the tool provides.

Popular tools like Optimizely and VWO (Visual Website Optimizer) offer robust testing capabilities and detailed analytics to help you understand the impact of your tests. Google Optimize was long a popular free option, but Google discontinued it in September 2023, so verify a tool’s current availability before committing to it. These platforms allow for testing everything from simple text changes to complex behavioral triggers, providing the flexibility needed to tailor your testing approach to your specific business requirements.

Additionally, ensure that the tool you choose allows for easy setup and management of tests, supports the segmentation of your audience for targeted testing, and offers dependable customer support. The goal is to find a tool that meets your current testing needs and can scale with your business as it grows and your testing strategies become more sophisticated.

There are numerous tools and platforms available for A/B testing. Whether you choose a simple page-testing tool or a comprehensive suite that integrates into your data ecosystem, selecting the right platform is crucial for proper test execution.

Best Practices for Accurate Results

To get reliable results, remember these best practices:

- Ensure your sample size is large enough to minimize the chance of random variability skewing the results.

- Run tests long enough to capture changes in user behavior but not so long that they’re influenced by seasonal or external factors.

- Validate that your testing tool is set up correctly to track all necessary conversions and associated metrics.
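The first two practices can be combined into a simple duration estimate: divide the required sample by your daily traffic, then round up to whole weeks so that weekday and weekend behavior are both covered. Rounding to full weeks is an assumption of this sketch rather than a rule from the text above, and the function name and figures are hypothetical.

```python
import math


def estimate_duration_days(sample_size_per_variant: int, daily_visitors: int,
                           variants: int = 2) -> int:
    """Days needed to reach the required sample, rounded up to whole weeks."""
    days = math.ceil(sample_size_per_variant * variants / daily_visitors)
    return math.ceil(days / 7) * 7


# Hypothetical inputs: 3,841 visitors per variant, 1,000 eligible visitors a day.
print(estimate_duration_days(3841, 1000))
```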

Analyzing Results of A/B Testing

Analyzing the results of your A/B tests is the most critical step, as it allows you to draw actionable insights and make data-driven decisions. Start by reviewing the performance of each variant against your key performance indicators (KPIs). Look for statistically significant differences that indicate one variation performed better.
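When reviewing a KPI such as conversion rate, it helps to look at the size of the difference and its uncertainty, not just which number is larger. The following sketch computes the relative lift and a normal-approximation (Wald) confidence interval for the absolute difference; the function name and the counts are hypothetical, and the approximation is only reasonable with fairly large samples.

```python
import math
from statistics import NormalDist


def lift_with_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                       confidence: float = 0.95):
    """Return (relative_lift, (low, high)); the interval is for rate_b - rate_a."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    diff = rate_b - rate_a
    # Unpooled standard error of the difference between two proportions.
    std_err = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff / rate_a, (diff - z * std_err, diff + z * std_err)


# Hypothetical counts: 200 of 2,000 visitors convert on A, 260 of 2,000 on B.
lift, (low, high) = lift_with_interval(200, 2000, 260, 2000)
print(f"relative lift: {lift:.1%}, difference between {low:.4f} and {high:.4f}")
```

If the interval for the difference includes zero, the data are still compatible with there being no real improvement.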

Use the analytics tools within your testing platform to compare conversion rates, click-through rates, or other relevant metrics directly related to the tested variable. It’s essential to go beyond surface-level analysis and dig into the data to understand why a variation performed the way it did. Consider segmenting your data further to uncover insights about how different user groups responded to each variation.
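Segmenting can be as simple as grouping raw records by segment and variant before computing rates. The sketch below assumes each record is a `(segment, variant, converted)` tuple; that record shape, the function name, and the sample rows are hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(events):
    """Return {segment: {variant: conversion_rate}} from (segment, variant, converted) rows."""
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [visitors, conversions]
    for segment, variant, converted in events:
        totals[(segment, variant)][0] += 1
        totals[(segment, variant)][1] += int(converted)
    rates = defaultdict(dict)
    for (segment, variant), (visitors, conversions) in totals.items():
        rates[segment][variant] = conversions / visitors
    return {segment: dict(by_variant) for segment, by_variant in rates.items()}


# Hypothetical records; a real export would contain far more rows.
events = [
    ("mobile", "A", True), ("mobile", "A", False), ("mobile", "B", True),
    ("desktop", "A", False), ("desktop", "B", True), ("desktop", "B", False),
]
print(conversion_by_segment(events))
```

Keep in mind that each segment contains fewer visitors than the full test, so segment-level differences are noisier and are best treated as leads for follow-up tests rather than firm conclusions.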

Remember, the goal of A/B testing isn’t just to find a “winner” but to learn about user preferences and behavior. Even a test with no significant difference between variations can provide valuable insights. Documenting your findings, sharing them with stakeholders, and using this knowledge to inform future tests and optimization strategies is crucial.

Interpreting results is arguably the most complex part of A/B testing. It’s the moment of truth when you decide which version actually performed better and why. This section will walk you through statistical significance and offer guidance on making sense of your data.

Interpreting Data and Statistical Significance

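Statistical significance tells you whether an observed difference between variants is larger than random fluctuation alone would plausibly produce. It is usually assessed with a p-value: the probability of seeing a difference at least as large as the one observed if the change had no real effect. When the p-value falls below a threshold chosen before the test (0.05 is a common convention), the result is called statistically significant. This concept is crucial, as it determines whether you can trust the results to make a decision.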
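For conversion-style metrics, one widely used way to check significance is a two-proportion z-test. The sketch below is a minimal version under the usual large-sample assumption; the function name and counts are hypothetical, and it does not handle the edge case where no one (or everyone) converts in both groups.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Under the "no real difference" assumption, both groups share one pooled rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical counts: 200 of 2,000 visitors convert on A, 260 of 2,000 on B.
p_value = two_proportion_p_value(200, 2000, 260, 2000)
print(f"p-value: {p_value:.4f}")
```

A small p-value means a gap this large would be unlikely if the two versions truly performed the same; it does not by itself tell you how large or how valuable the improvement is.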

Making Data-Driven Decisions

Once you’ve determined statistical significance, it’s time to understand why one version performed better and apply these insights to your overall strategy.

Optimizing Based on Findings

The process of optimization based on your A/B testing findings is a continuous loop of refinement and improvement. After identifying which version of your test performed better, the next step is to implement the successful elements broadly across your marketing campaigns, product features, or website design, depending on the test context. However, the work doesn’t stop there.

Following implementation, monitoring the broader impact of these changes is essential. This includes immediate success metrics and long-term indicators such as customer satisfaction, retention rates, and overall engagement. It’s also an opportunity to brainstorm additional features or elements to test, pushing further for optimization.

Remember, A/B testing is not a one-time activity but a philosophy of continuous improvement. Each test provides valuable insights, not just about what works but also about your audience’s preferences and behaviors. Leverage these insights to inform your strategy, foster innovation, and maintain a competitive edge.

By adopting a methodical approach to A/B testing, analyzing results carefully, and applying findings intelligently, businesses can enhance user experience, boost conversion rates, and ultimately achieve their strategic goals.

Implementing Changes for Improvement

Implementing changes based on A/B testing insights involves altering elements on your website or product and understanding the broader implications of these changes on your overall strategy. When you decide to implement a successful variation, consider how it aligns with your brand identity, user expectations, and long-term business objectives. It’s critical to roll out changes seamlessly to the user, ensuring that the improved elements enhance the user experience.

Furthermore, it’s essential to communicate the reasoning behind these changes to your team and stakeholders. Sharing the insights and data that led to the decision will foster a culture of data-driven decision-making within your organization. Continual education on the impact and importance of A/B testing helps to align teams towards common goals and encourages a collaborative effort in optimizing the user experience.

After implementation, closely monitor the performance of the newly adopted changes. This is not the end but rather the beginning of another cycle of testing and optimization. The digital landscape constantly evolves, and what works today may not be effective tomorrow. Stay vigilant, continue to test, and use data to guide your decisions, ensuring ongoing improvements and sustained success in your strategies.

Iterative Testing for Continuous Optimization

Iterative testing is the heartbeat of continuous optimization. It involves not just a single cycle of testing but an ongoing process of refinement where each test builds upon the insights gathered from the last. The aim is to create a virtuous cycle of improvement, where each iteration enhances user experience, drives higher conversions, and uncovers deeper insights into user behavior. To effectively engage in iterative testing, it’s vital to maintain a structured testing calendar that prioritizes tests based on potential impact and aligns with your strategic goals. Additionally, fostering a culture that values experimentation and is not afraid of failure is crucial, as not all tests will result in positive outcomes. However, each test, irrespective of its immediate success, is a stepping stone towards a deeper understanding of your audience and a more optimized digital experience.

Case Studies of A/B Testing

Exploring real-world examples can illustrate the powerful impact of A/B testing and continuous optimization strategies. One notable case study involves a leading e-commerce platform that implemented A/B testing to enhance the user experience on its product pages. By testing different elements, such as button color, product description layout, and image sizes, they identified a combination that significantly increased the conversion rate by 15%. This improvement boosted sales and enhanced user engagement, as evidenced by increased time spent on the site and a higher rate of return visits.

Another example comes from a popular news website that used A/B testing to optimize its homepage layout. The goal was to increase reader engagement and subscription rates. Through iterative testing, the site discovered that a cleaner design with fewer distractions led to a substantial increase in readers’ time spent on articles and a 10% uptick in subscription sign-ups. These changes directly contributed to the website’s bottom line and demonstrated the value of data-driven decision-making in optimizing content presentation for better engagement and conversion.

These case studies underscore the importance of adopting a rigorous A/B testing and optimization framework. By methodically applying these strategies, businesses can uncover insights that lead to meaningful improvements in user experience, engagement, and business metrics.

Conclusion

The power of A/B testing and continuous optimization cannot be overstated in the digital landscape. By rigorously applying these methodologies, businesses can significantly enhance the digital user experience, leading to higher engagement, improved conversion rates, and, ultimately, greater business success. The case studies detailed above show that data-driven decision-making, when done correctly, can unearth powerful insights that lead to meaningful and impactful changes. It is crucial, however, that businesses not become complacent. The digital world is constantly in flux, with user preferences and behaviors evolving rapidly. Therefore, the commitment to ongoing testing, learning from outcomes, and adapting strategies accordingly is fundamental to staying ahead in a competitive environment. By fostering a culture that embraces experimentation, leveraging data intelligently, and continuously seeking improvement, businesses can ensure they remain relevant, responsive, and successful in meeting their strategic goals.

A/B testing is not just about changing elements on your website or marketing campaigns. It’s about evolving your understanding of your audience and how to best serve their needs. In conclusion, with the right approach, A/B testing can be pivotal in boosting conversion rates, refining user experiences, and, ultimately, growing your business.